About Me

I am a research engineer in the LG Electronics CTO Division. I am currently working on an AI-aided vehicle system at the Automotive & Business Solution Center. My interests are perception algorithms and their optimization on edge devices for intelligent vehicles.

I am most skilled in deep learning and computer vision for autonomous driving.

Programming languages & frameworks: C/C++, Python, TensorFlow, PyTorch, Caffe, TensorRT, SNPE, CUDA, OpenCL

Education

Jeju Science High School

2008 Mar - 2010 Feb

Early graduation

Loved physics.

KAIST

Bachelor's degree

2010 Feb - 2015 Feb

Aerospace Engineering Department

Self-studied programming. Became interested in autonomous driving.

UCLA

Summer session

2011 Jun - 2011 Aug

Mathematics Department

Studied differential equations & algebra.

NYU

Intensive English Program

2013 Sep - 2013 Dec

English Language Institute

Studied English, enjoyed NYC.

KAIST

Master's degree

2015 Mar - 2017 Aug

Unmanned System Research Group @ Aerospace Engineering Department

Designed and developed DNN-based perception system for the KAIST autonomous vehicle ‘Eurecar’. Developed perception algorithms for mobile robots.

Experience

Unmanned System Research Group

Designed and developed DNN-based perception system for the KAIST autonomous vehicle ‘Eurecar’. Developed perception algorithms for mobile robots.

Smart Mobility Lab. @ CTO Division

Developed DNN-based perception algorithms for autonomous driving & AR navigation.

Projects

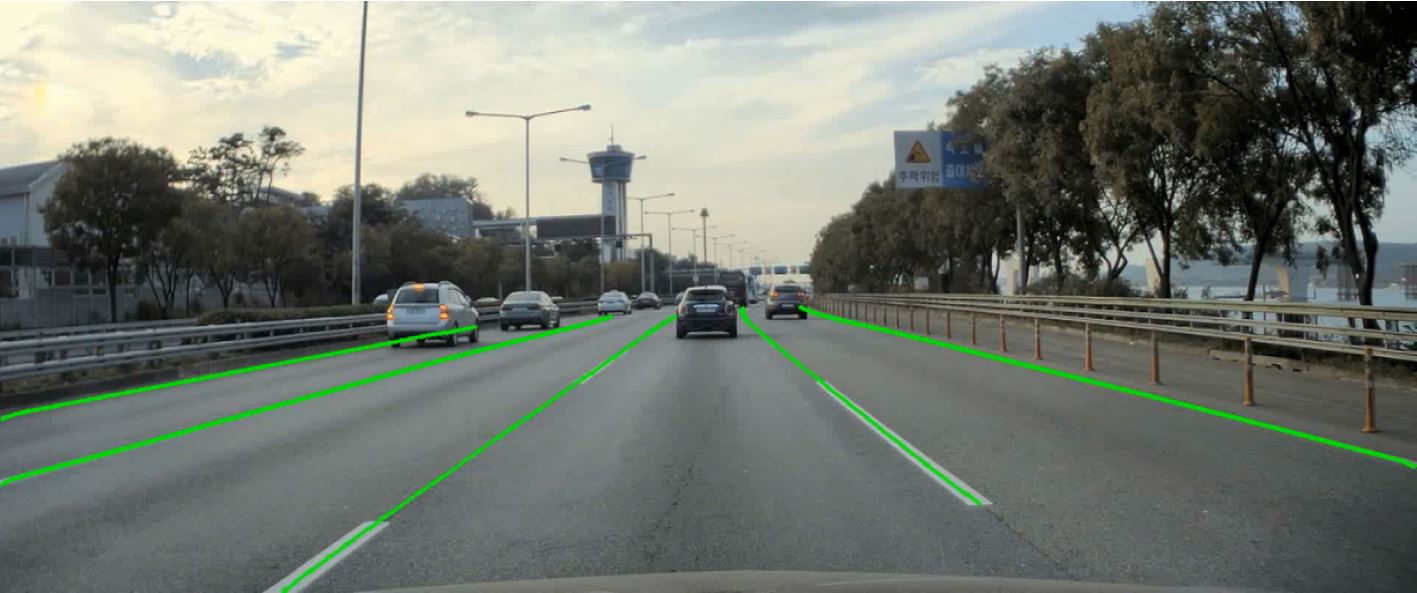

Perception for autonomous driving & AR navigation

(2020 @ LGE) Developed state-of-the-art lane detection for autonomous driving & AR navigation.

I proposed a fast and robust lane detection method based on an instance segmentation approach. The method ranked 3rd globally on the TuSimple Lane Detection Benchmark (link) and runs in real time on a mobile phone. My paper was accepted at the ECCV 2020 Workshop on Perception for Autonomous Driving. I implemented a DNN application on GPU/DSP/NPU runtimes using Android, C++ with JNI, OpenCL, and the Snapdragon Neural Processing Engine SDK (SNPE).

Autonomous driving in urban environments

(2018~2019 @ LGE) Developed perception algorithms using multiple sensors.

We developed an autonomous vehicle capable of driving in an urban environment. I developed ROS packages for lane detection, free-space detection, and occupancy grid mapping, fusing data from several cameras and lidars. I designed a single DNN jointly trained on independent datasets and accelerated it with TensorRT and CUDA on NVIDIA platforms. During this period, I strengthened my ability to implement state-of-the-art deep learning papers in various frameworks (Caffe, TensorFlow, PyTorch).

EureCar: The self-driving car for urban driving and racing

(2015~2017 @ KAIST) Developed a deep learning-based perception system and end-to-end driving.

I was a lead perception engineer of ‘Eurecar’, KAIST's representative self-driving car. I designed a vision system using cameras and lidars. We demonstrated advanced autonomous driving by applying cutting-edge deep learning. Specifically, I used semantic segmentation and object detection to recognize the driving environment and converted the results into vehicle coordinates for use in autonomous driving. In addition to perception, I participated in Eurecar's end-to-end self-driving research. Throughout this work, the applications were implemented with the Caffe framework on NVIDIA platforms (GeForce series, DRIVE PX 2, Jetson series).

- Papers:

- Seokwoo Jung, “Development of driving environment recognition system for autonomous vehicle using deep learning based image processing”, KAIST Master's thesis, 2017

- Unghui Lee, Jiwon Jung, Seokwoo Jung, David Hyunchul Shim, “Development of a self-driving car that can handle the adverse weather”, IJAT, 2018

- Chanyoung Jung, Seokwoo Jung, David Hyunchul Shim, “A Hybrid Control Architecture For Autonomous Driving In Urban Environment”, ICARCV, 2018

- Seokwoo Jung, Unghui Lee, Jiwon Jung, David Hyunchul Shim, “Real-time Traffic Sign Recognition system with deep convolutional neural network”, URAI, 2016

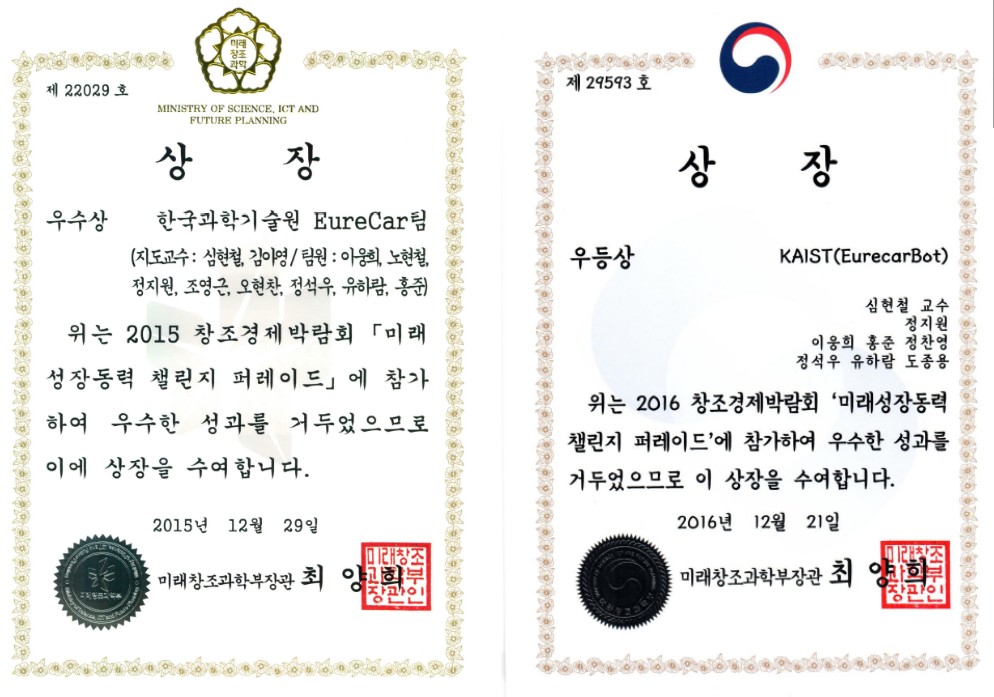

- Awards: 2015 Minister's (Science and ICT) Award, 2016 Minister's (Science and ICT) Award

- Videos:

- Autonomous driving on a driver's license test course: link1, link2 (GUI application view)

- Autonomous driving on a racing circuit: link

- Autonomous parking: link

- End-to-end driving: link

- News & Press: link1, link2 (Discovery Channel Canada)

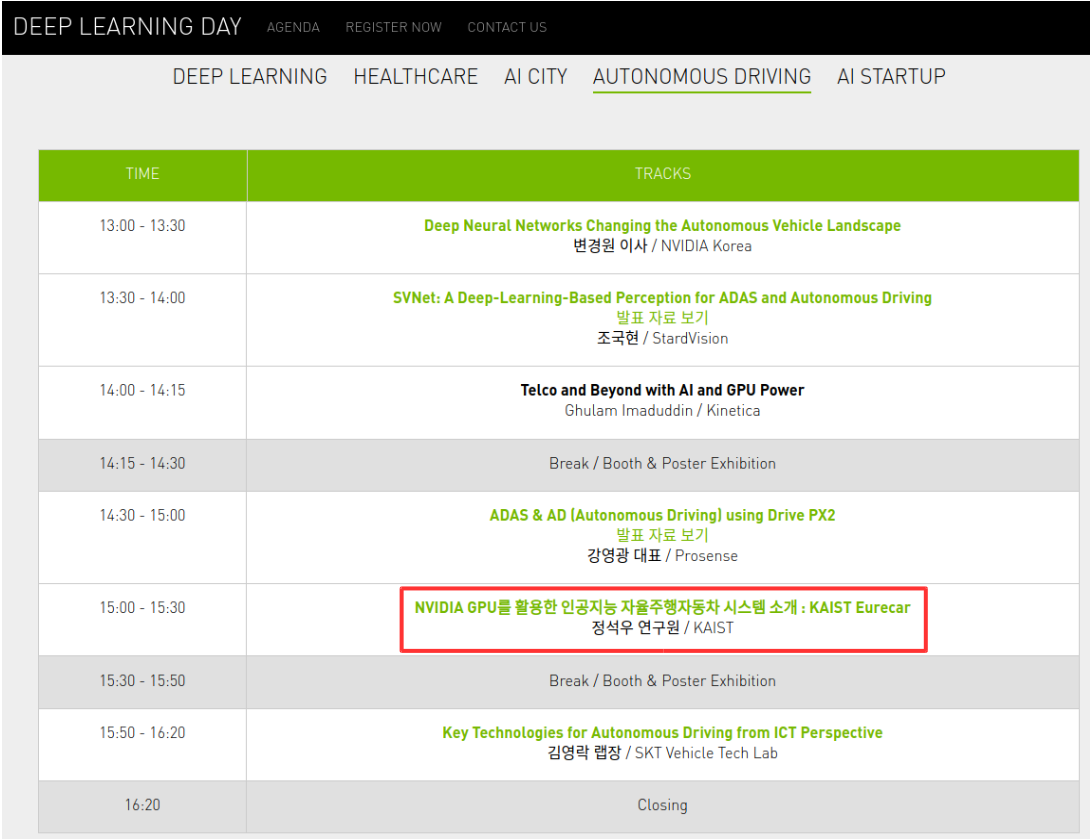

- I presented our self-driving car research achievements at NVIDIA Deep Learning Day 2017.

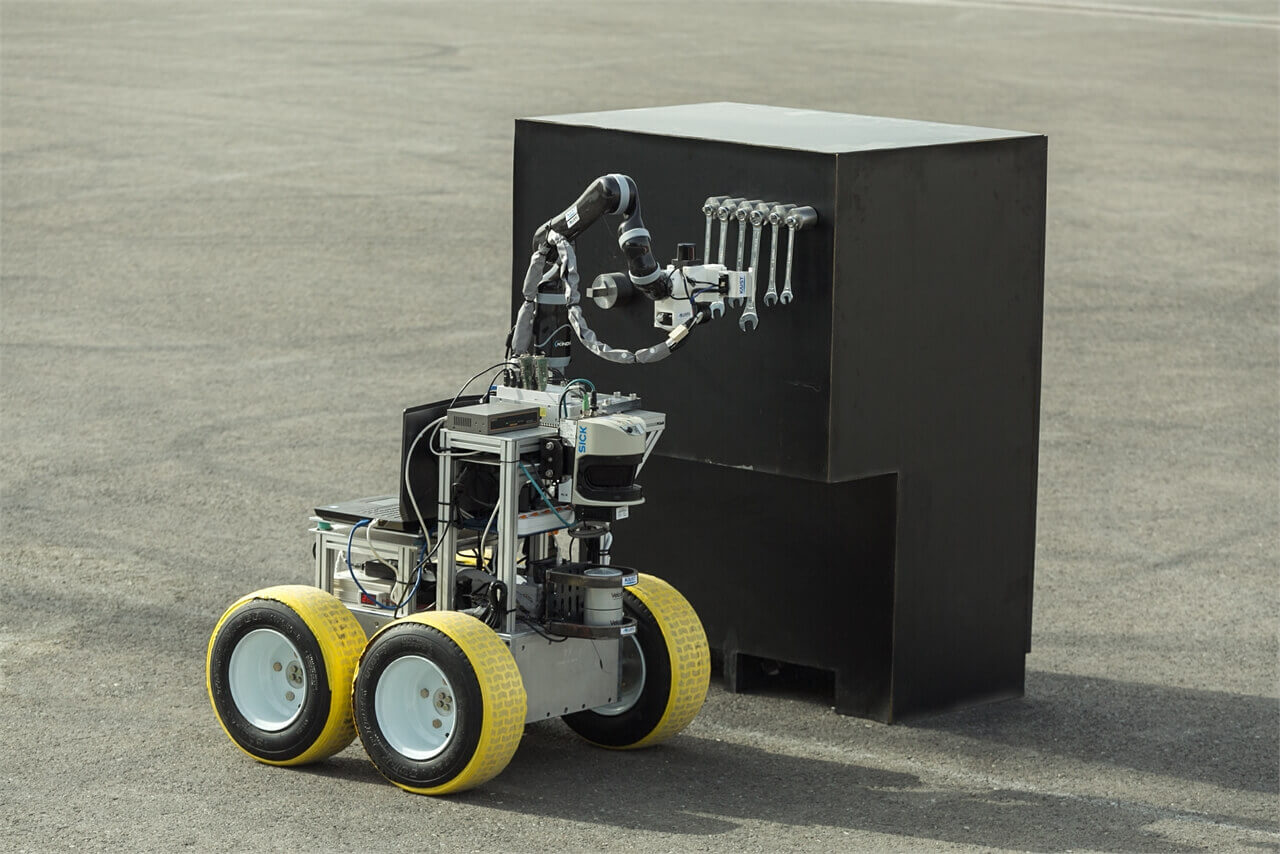

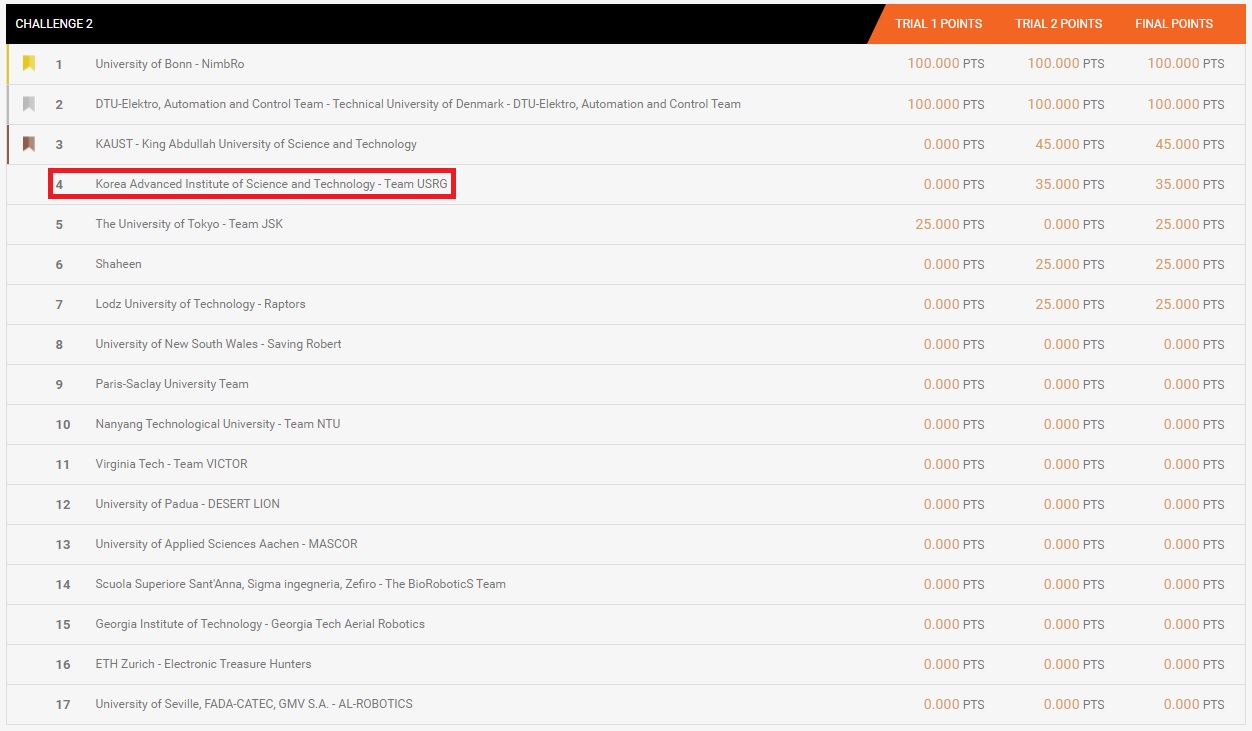

Mohamed Bin Zayed International Robotics Challenge (MBZIRC) 2017

https://www.mbzirc.com/challenge/2017

(2017 @ KAIST) Developed self-navigation and deep learning-based visual servoing for a UGV.

I was a lead perception engineer on the KAIST team for MBZIRC 2017 Challenge 2. The challenge requires a UGV to locate and reach a panel, then physically operate a valve stem on it. I developed a self-navigation algorithm using lidars, as well as DNN-based visual servoing and wrench detection algorithms. In MBZIRC 2017, 45 finalists were selected from over 316 teams in 47 countries. Our team ranked 4th in Challenge 2 and successfully completed all UGV missions in the Grand Challenge.

- Paper : Jun Hong, Seokwoo Jung, Chanyoung Jung, Jiwon Jung, David Hyunchul Shim, “A general‐purpose task execution framework for manipulation mission of the 2017 Mohamed Bin Zayed International Robotics Challenge”, Journal of Field Robotics, 2019

- News & Press: link1 (English), link2 (Korean)

- Documentary: KBS 길에서 만난 과학, episodes 4, 5, 6, and 7

- Source code: GitHub repo link

A Little More About Me

I am ready to dedicate myself to research on autonomous driving.